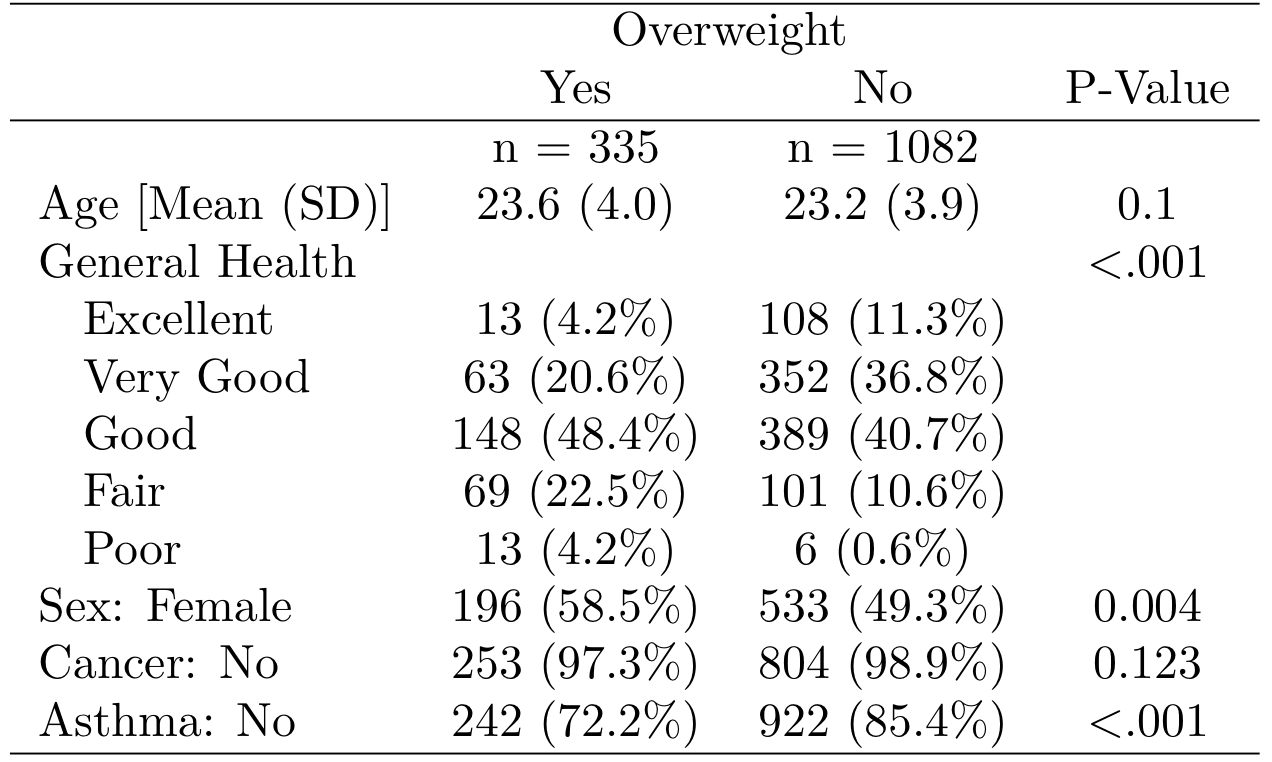

class: center, middle, inverse, title-slide

# Intro to Statistics
## Cohen Chapter 1
<br><br>
.small[EDUC/PSY 6600]

---
class: inverse, middle, center

# Background Information

---
background-image: url(figures/fig_scale_variable.png)
background-position: 50% 80%
background-size: 600px

# Scale vs. Variable

---

# Scale vs. Variable

.pull-left[
## MEASUREMENT SCALE
.large[
- .coral[Nominal] = named groupings, no meaningful order
- .dcoral[Ordinal] = groupings that do have natural order
- .nicegreen[Interval] = precise units that are equally spaced
- .bluer[Ratio] = interval + true zero point
]]

.pull-right[
## VARIABLE TYPE
.large[
- .coral[Categorical] = finite, countable number of levels, no intermediate values possible
- .nicegreen[Numeric] = infinite intermediate values are possible, at least in theory
]

*NOTE: due to limits on measurement precision, observed data may be discrete, even though the underlying construct is continuous*
]

---
background-image: url(figures/fig_obs_exp.jpg)
background-position: 50% 90%
background-size: 900px

# Observational vs. Experimental

---

# Rounding Numbers

### Rules

.large[
- If you want to round to N decimal places, look at the digit in the `\(N + 1\)` place…
  - If it is LESS than 5 -> do not change the digit in the Nth place
  - If it is MORE than 5 (or it is 5 followed by any non-zero digit) -> increase the digit in the Nth place by 1
  - If it is EQUAL to 5 AND there are no non-zero digits to the right, -> increase the digit in the Nth place by 1 ONLY IF the Nth digit is ODD (do not change it if it is EVEN)

In all cases, the last step is to drop the digit in the `\(N+1\)` place and other digits to the right
]

--

.center[.huge[.coral[Examples]]]

???

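The rounding rules on this slide can be sketched in `R`. This is only an illustration: `round_half_even()` is a hypothetical helper (it is not part of the course materials or of any package), and it assumes that a small tolerance, `tol`, is enough to detect a digit that is exactly 5 with nothing after it. Base `R`'s `round()` operates on binary floating-point values, so it may not always agree with hand calculations in those tie cases.

```r
# Hypothetical helper: round to `digits` decimal places, sending exact ties
# (a 5 with no non-zero digits after it) to the nearest EVEN digit.
round_half_even <- function(x, digits = 2, tol = 1e-9) {
  scaled <- x * 10^digits        # move the Nth decimal place to the units place
  lower  <- floor(scaled)        # candidate value if we do not round up
  frac   <- scaled - lower       # what is left in the (N + 1) place and beyond
  is_tie <- abs(frac - 0.5) < tol
  # Tie: round up only if the Nth digit is odd. Otherwise: round up if more than half.
  round_up <- ifelse(is_tie, lower %% 2 == 1, frac > 0.5)
  (lower + round_up) / 10^digits
}

round_half_even(c(65.3, 8/3, 0.4255, 0.4358, 0.425, 0.435))
```

Under these assumptions, the call should reproduce the hand-worked answers in these notes.
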
Round to two decimal places

- `\(65.3 = 65.30\)`
- `\(8/3 = 2.67\)`
- `\(0.4255 = 0.43\)`
- `\(0.4358 = 0.44\)`
- `\(0.425 = 0.42\)`
- `\(0.435 = 0.44\)`

---
background-image: url(figures/fig_summation_notation.png)
background-position: 50% 50%
background-size: 500px

# Summation Notation

<br><br><br><br><br><br><br><br><br><br><br><br><br>

.center[.Huge[.coral[Examples]]]

???

Some examples up on the board:

$$
\frac{1}{5}\sum_{i = 1}^5 x_i
$$

$$
\sum_{i = 1}^2 (x_i + y_i)
$$

$$
\sum_{i = 1}^n y_i
$$

$$
\sum_{i = 1}^2 (x_i \times y_i)
$$

---

# Summation Rules

.pull-left[
#### Number 1

$$
\sum (X_i + Y_i) = \sum X_i + \sum Y_i
$$

$$
\sum (X_i - Y_i) = \sum X_i - \sum Y_i
$$

#### Number 2

$$
\sum_{i=1}^n C = nC
$$

#### Number 3

$$
\sum CX_i = C \sum X_i
$$
]

--

.pull-right[
#### Number 4

$$
\sum (X_i \times Y_i) \neq \sum X_i \times \sum Y_i
$$
]

---
class: inverse, middle, center

# Intro to R

---

# Download `R` and `RStudio`

<br>

.huge[If `R` is like a car's engine, then `RStudio` is the steering wheel, the pedals, and the comfortable seat]

--

.pull-left[.huge[.center[
`R`

[www.r-project.org](https://cran.cnr.berkeley.edu/)
]]]

.pull-right[.huge[.center[
`RStudio`

[www.rstudio.com](https://www.rstudio.com/products/rstudio/download/)
]]]

---

# Focus on what is needed in `R`

<br>

.center[.Huge[
".dcoral[Success] is neither magical nor mysterious. Success is the natural consequence of consistently applying the .nicegreen[basic fundamentals]." --- Jim Rohn
]]

.footnote[https://www.brainyquote.com/quotes/jim_rohn_122132?src=t_fundamentals]

---

# Intro to `R`

.pull-left[.huge[
Why Use `R`?
]
.large[
- .coral[**Free**]
- Almost always up-to-date
- Best .bluer[data visualizations]
- .nicegreen[Syntax oriented] (easy to reproduce analyses)
- Gets updated regularly
- You can make your .gray1[own functionality] (e.g., `table1()`)
]]

--

.pull-right[
.huge[`table1()` produces 👇]
<br>
]

---
count: false

# Intro to `R`

.pull-left[.huge[
Why Use `R`?
]
.large[
- .coral[**Free**]
- Almost always up-to-date
- Best .bluer[data visualizations]
- .nicegreen[Syntax oriented] (easy to reproduce analyses)
- Gets updated regularly
- You can make your .gray1[own functionality] (e.g., `table1()`)
]]

.pull-right[.huge[
Any Issues?
]
.large[
- Learning curve
- Too extensive (people often focus on too much)
- Advisors sometimes don't use it

The pros heavily outweigh the cons *if* you are willing to use it. It can save you tons of time in the long run.
]]

---

.pull-left[
## .coral[Objects]

.large[.large[
Virtual objects are like physical objects (e.g., a car is good to travel in, a table not so much)

`R` uses virtual objects (`data.frame`, `vectors`)
]]

```r
x <- 5
```

```r
x = 5
```
]

--

.pull-right[
## .bluer[Vectors] and .nicegreen[Data Frames]

.large[.large[
- a `vector` is a single variable (can be numeric, character, or factor)
- a `data.frame` is a group of vectors (variables)
]]

```r
df
```

```
>   x y
> 1 5 1
> 2 3 1
> 3 4 0
> 4 1 0
```
]

---

# Functions

.huge[It's how we do stuff in `R`]

.pull-left[.large[
Generally looks like:

- `stuff(arg1, arg2)`
- `stuff` is the function's name
- `()` surround the arguments that go in the function
- `arg1` etc. are the pieces of information we give a function
]]

--

.pull-right[
.large[Here's an example of the "mean" function:

- Using our `df` data.frame from before
- we grab a single variable from `df` using `$`
- `mean()` wants a single variable and it provides the average
]

```r
## This is a comment
mean(df$x)
```

```
## [1] 3.25
```
]

---

# Important first steps in `R`

.large[.large[
1. Read in Data
2. Quickly assess the data
3. Clean the data
4. Analyze the data
]]

--

.large[We'll show each of these over the next few weeks, starting with reading in, assessing, and cleaning the data]

---

# Read in the data

.huge[Data comes in various files:]

.pull-left[.large[
- CSV
- tab-delimited
- SPSS
- Excel
]]

.pull-right[.large[
- SAS
- Stata
- Mplus
- etc.
]] -- ### `R` can read all types -- .huge[Generally, it all works in a similar way] --- # Read in data - .large[If your data is called `my_data_file.csv`, then:] ```r my_data <- rio::import("my_data_file.csv") ``` -- - .large[If your data is called `my_data_file.txt` (and is tab-delimited), then:] ```r my_data <- rio::import("my_data_file.txt", sep="\t", header=TRUE) ``` -- - .large[If your data is an SPSS file, then:] ```r my_data <- rio::import("my_data_file.sav") ``` --- background-image: url(figures/fig_inho_data_desc.png) background-position: 50% 90% background-size: 800px # Let's use the data from the book --- # Steps for .dcoral[Preparing] the Data for Analysis .large[.large[ 1. .coral[Get the data] 2. .nicegreen[Prep the data] - Variable Labels - Value Labels - Missing Values 3. .bluer[Compute] new variables and values (fill in missing codes, recode scores, categorize/group values, combine) 4. Get .dcoral[descriptives] using `tableF()` and `table1()` from the `furniture` package to check what is going on ]] --- # Step 1: Get the data ```r ## Data is in .sav form (SPSS) library(rio) d <- rio::import("Data/Ihno_dataset.sav") d ``` ``` ## Sub_num Gender Major Reason Exp_cond Coffee Num_cups Phobia Prevmath ## 1 1 1 1 3 1 1 0 1 3 ## 2 2 1 1 2 1 0 0 1 4 ## 3 3 1 1 1 1 0 0 4 1 ## 4 4 1 1 1 1 0 0 4 0 ## 5 5 1 1 1 1 0 1 10 1 ## 6 6 1 1 1 2 1 1 4 1 ## 7 7 1 1 1 2 0 0 4 2 ## 8 8 1 1 3 2 1 2 4 1 ## 9 9 1 1 1 2 0 0 4 1 ## 10 10 1 1 1 2 1 2 5 0 ## 11 11 1 1 1 2 0 1 5 1 ## 12 12 1 1 1 2 0 0 4 0 ## 13 13 1 1 1 2 0 1 7 0 ## 14 14 1 1 3 3 0 2 4 1 ## 15 15 1 1 1 4 1 3 3 1 ## 16 16 1 1 1 4 1 0 8 0 ## 17 17 1 1 1 4 0 0 4 1 ## 18 18 1 1 1 4 1 3 5 1 ## 19 19 1 1 3 4 1 2 0 3 ## 20 20 1 2 1 2 1 1 4 1 ## 21 21 1 2 2 2 1 2 4 0 ## 22 22 1 2 1 3 1 0 3 1 ## 23 23 1 2 1 3 0 2 4 1 ## 24 24 1 2 2 2 1 2 0 3 ## 25 25 1 2 2 3 1 2 1 3 ## 26 26 1 2 2 4 0 0 1 4 ## 27 27 1 2 2 4 1 2 0 6 ## 28 28 1 2 3 1 1 3 4 2 ## 29 29 1 2 1 1 0 0 3 2 ## 30 30 1 2 3 1 1 0 5 1 ## 31 31 1 3 1 1 1 1 9 1 ## 32 32 1 
3 1 3 1 3 3 0 ## 33 33 1 3 1 4 1 2 4 1 ## 34 34 1 3 2 1 1 1 1 1 ## 35 35 1 3 2 1 0 0 2 1 ## 36 36 1 3 2 2 1 1 0 0 ## 37 37 1 3 2 4 1 2 3 1 ## 38 38 1 3 3 1 0 0 2 0 ## 39 39 1 3 3 2 0 1 8 1 ## 40 40 1 3 3 4 0 0 4 1 ## 41 41 1 3 3 4 0 0 4 1 ## 42 42 1 4 2 1 0 0 0 1 ## 43 43 1 4 2 2 1 0 3 1 ## 44 44 1 4 2 4 0 0 2 1 ## 45 45 1 4 2 4 0 0 2 1 ## 46 46 1 4 2 4 0 1 1 0 ## 47 47 1 4 3 1 0 0 1 1 ## 48 48 1 4 3 1 1 1 5 0 ## 49 49 1 4 3 2 1 1 5 1 ## 50 50 1 4 3 3 0 0 4 1 ## 51 51 1 4 3 3 0 0 4 1 ## 52 52 1 4 3 4 1 0 10 0 ## 53 53 1 4 3 4 0 0 7 1 ## 54 54 1 5 1 3 1 1 3 5 ## 55 55 1 5 1 3 1 2 4 1 ## 56 56 1 5 2 4 0 1 2 2 ## 57 57 1 5 3 1 0 0 2 0 ## 58 58 2 1 1 1 1 1 1 1 ## 59 59 2 1 2 1 0 0 7 0 ## 60 60 2 1 1 2 0 1 5 0 ## 61 61 2 1 1 3 1 1 5 1 ## 62 62 2 1 1 3 0 0 1 2 ## 63 63 2 1 1 3 0 0 3 0 ## 64 64 2 1 1 4 0 0 3 1 ## 65 65 2 1 1 4 0 1 5 1 ## 66 66 2 1 2 2 0 0 1 4 ## 67 67 2 1 3 1 0 0 0 3 ## 68 68 2 2 1 2 0 0 5 0 ## 69 69 2 2 1 3 0 0 6 2 ## 70 70 2 2 1 3 1 1 3 2 ## 71 71 2 2 1 4 1 1 5 0 ## 72 72 2 2 1 4 1 2 4 2 ## 73 73 2 2 2 3 0 0 0 4 ## 74 74 2 2 2 3 0 0 2 4 ## 75 75 2 2 2 4 0 0 2 3 ## 76 76 2 2 3 1 0 0 3 1 ## 77 77 2 2 1 2 1 1 1 2 ## 78 78 2 2 3 2 0 0 1 3 ## 79 79 2 2 3 3 1 1 0 2 ## 80 80 2 2 3 3 0 0 2 2 ## 81 81 2 2 3 4 0 1 10 0 ## 82 82 2 3 1 1 0 0 3 1 ## 83 83 2 3 1 1 0 1 3 1 ## 84 84 2 3 1 1 1 1 8 1 ## 85 85 2 3 1 1 1 0 3 1 ## 86 86 2 3 3 2 0 0 3 1 ## 87 87 2 3 2 2 1 0 1 0 ## 88 88 2 3 2 3 0 0 1 4 ## 89 89 2 3 3 2 0 0 2 0 ## 90 90 2 3 3 3 0 0 8 1 ## 91 91 2 3 3 3 1 1 3 1 ## 92 92 2 4 2 1 0 0 0 1 ## 93 93 2 4 2 4 0 0 0 2 ## 94 94 2 4 3 3 1 2 4 0 ## 95 95 2 5 3 3 0 0 7 2 ## 96 96 2 5 2 2 1 1 0 1 ## 97 97 2 5 2 3 0 0 0 2 ## 98 98 2 5 2 3 0 0 2 2 ## 99 99 2 5 2 4 1 1 1 4 ## 100 100 2 5 1 2 0 0 2 2 ## Mathquiz Statquiz Exp_sqz Hr_base Hr_pre Hr_post Anx_base Anx_pre ## 1 43 6 7 71 68 65 17 22 ## 2 49 9 11 73 75 68 17 19 ## 3 26 8 8 69 76 72 19 14 ## 4 29 7 8 72 73 78 19 13 ## 5 31 6 6 71 83 74 26 30 ## 6 20 7 6 70 71 76 12 15 ## 7 13 3 4 71 70 66 12 16 ## 8 23 7 7 77 87 84 
17 19 ## 9 38 8 7 73 72 67 20 14 ## 10 NA 7 6 78 76 74 20 24 ## 11 29 8 10 74 72 73 21 25 ## 12 32 8 7 73 74 74 32 35 ## 13 18 1 3 73 76 78 19 23 ## 14 NA 5 4 72 83 77 18 27 ## 15 21 8 6 72 74 68 21 27 ## 16 NA 3 1 76 76 79 14 18 ## 17 37 8 7 68 67 74 15 19 ## 18 37 7 4 77 78 73 39 39 ## 19 32 10 9 74 74 75 20 12 ## 20 NA 7 7 74 75 73 15 11 ## 21 25 7 6 74 84 77 19 27 ## 22 22 4 3 73 71 79 18 13 ## 23 35 8 7 71 74 76 18 22 ## 24 47 8 7 75 75 71 23 28 ## 25 41 6 6 76 73 72 18 24 ## 26 26 7 6 71 76 75 14 10 ## 27 39 8 8 74 79 79 17 12 ## 28 21 7 8 78 79 73 18 13 ## 29 NA 7 9 70 63 66 18 12 ## 30 22 4 7 73 78 69 21 14 ## 31 21 8 8 75 83 73 18 21 ## 32 NA 7 6 78 76 84 24 27 ## 33 26 8 7 76 74 81 17 26 ## 34 20 8 9 76 69 71 17 25 ## 35 30 6 9 69 69 64 22 16 ## 36 40 8 9 77 79 74 21 14 ## 37 35 8 7 78 73 78 19 12 ## 38 10 7 8 74 72 72 15 21 ## 39 35 6 5 71 70 75 20 27 ## 40 44 6 4 67 67 73 12 19 ## 41 26 7 5 77 78 78 21 15 ## 42 NA 9 11 71 72 67 20 23 ## 43 15 3 4 76 79 71 19 21 ## 44 42 7 7 69 70 64 13 24 ## 45 33 8 7 72 64 68 20 14 ## 46 29 6 6 72 79 76 22 27 ## 47 39 7 8 71 63 66 15 21 ## 48 38 8 8 71 82 79 20 26 ## 49 NA 5 4 75 76 70 16 18 ## 50 24 7 6 74 76 75 22 27 ## 51 NA 7 5 74 74 69 30 36 ## 52 26 5 2 78 80 80 19 24 ## 53 14 5 3 68 78 73 20 29 ## 54 45 9 9 76 79 75 15 8 ## 55 28 8 8 73 78 77 19 13 ## 56 31 8 7 74 78 82 27 21 ## 57 NA 8 9 72 67 67 16 20 ## 58 32 8 10 74 84 76 17 19 ## 59 15 3 6 73 73 71 19 16 ## 60 26 8 7 73 74 76 16 20 ## 61 26 8 7 80 82 86 18 27 ## 62 32 5 5 67 68 73 20 25 ## 63 21 8 7 65 75 69 26 29 ## 64 43 8 8 71 72 76 18 13 ## 65 NA 8 5 72 75 68 21 26 ## 66 34 9 11 70 73 65 11 19 ## 67 33 10 11 72 67 68 16 25 ## 68 30 7 7 69 70 74 18 15 ## 69 46 8 6 68 74 71 16 10 ## 70 NA 7 7 76 79 71 19 13 ## 71 33 7 4 68 71 77 18 21 ## 72 29 7 6 76 79 71 22 15 ## 73 30 9 10 69 70 74 15 21 ## 74 34 8 7 68 70 69 18 21 ## 75 32 9 8 70 62 71 17 23 ## 76 37 6 8 70 73 77 15 23 ## 77 NA 8 10 69 67 64 14 17 ## 78 31 7 8 64 74 70 22 25 ## 79 30 8 8 68 72 71 10 
13 ## 80 28 5 5 71 74 67 15 17 ## 81 14 6 3 69 78 76 20 25 ## 82 9 7 8 71 69 76 13 10 ## 83 11 4 7 72 83 73 14 16 ## 84 30 6 7 76 78 71 15 18 ## 85 15 5 7 76 67 71 18 12 ## 86 32 3 5 72 70 67 15 9 ## 87 22 5 4 74 78 72 20 27 ## 88 NA 8 9 70 71 69 10 16 ## 89 25 6 7 68 70 73 16 20 ## 90 18 4 3 71 73 77 15 19 ## 91 11 4 3 72 75 76 24 17 ## 92 11 6 9 71 78 72 16 21 ## 93 37 9 9 72 71 73 17 11 ## 94 28 7 6 73 77 79 24 18 ## 95 NA 8 6 69 70 71 23 28 ## 96 33 7 9 74 73 71 17 18 ## 97 28 8 9 70 66 65 17 12 ## 98 38 9 10 65 65 69 18 14 ## 99 41 8 8 72 68 73 17 11 ## 100 39 7 7 70 70 64 17 11 ## Anx_post ## 1 20 ## 2 16 ## 3 15 ## 4 16 ## 5 25 ## 6 19 ## 7 17 ## 8 22 ## 9 17 ## 10 19 ## 11 22 ## 12 33 ## 13 20 ## 14 28 ## 15 22 ## 16 21 ## 17 18 ## 18 40 ## 19 18 ## 20 20 ## 21 23 ## 22 19 ## 23 25 ## 24 24 ## 25 26 ## 26 18 ## 27 16 ## 28 16 ## 29 14 ## 30 17 ## 31 23 ## 32 25 ## 33 15 ## 34 19 ## 35 18 ## 36 19 ## 37 17 ## 38 16 ## 39 22 ## 40 17 ## 41 15 ## 42 21 ## 43 17 ## 44 22 ## 45 22 ## 46 24 ## 47 13 ## 48 26 ## 49 23 ## 50 23 ## 51 32 ## 52 24 ## 53 30 ## 54 17 ## 55 18 ## 56 24 ## 57 22 ## 58 14 ## 59 16 ## 60 17 ## 61 20 ## 62 24 ## 63 23 ## 64 16 ## 65 27 ## 66 9 ## 67 15 ## 68 15 ## 69 17 ## 70 15 ## 71 19 ## 72 20 ## 73 17 ## 74 19 ## 75 19 ## 76 14 ## 77 15 ## 78 19 ## 79 15 ## 80 17 ## 81 23 ## 82 13 ## 83 15 ## 84 17 ## 85 17 ## 86 13 ## 87 18 ## 88 14 ## 89 19 ## 90 21 ## 91 21 ## 92 15 ## 93 17 ## 94 20 ## 95 24 ## 96 17 ## 97 13 ## 98 19 ## 99 18 ## 100 14 ``` --- # Step 2: Prep the data ```r ## install.packages("tidyverse") library(tidyverse) d_clean <- d %>% mutate(Major = factor(Major, labels = c("Psychology", "Premed", "Biology", "Sociology", "Economics"))) %>% mutate(Coffee = factor(Coffee)) d_clean ``` ``` ## Sub_num Gender Major Reason Exp_cond Coffee Num_cups Phobia ## 1 1 1 Psychology 3 1 1 0 1 ## 2 2 1 Psychology 2 1 0 0 1 ## 3 3 1 Psychology 1 1 0 0 4 ## 4 4 1 Psychology 1 1 0 0 4 ## 5 5 1 Psychology 1 1 0 1 10 ## 6 6 1 Psychology 1 2 1 1 4 
## 7 7 1 Psychology 1 2 0 0 4 ## 8 8 1 Psychology 3 2 1 2 4 ## 9 9 1 Psychology 1 2 0 0 4 ## 10 10 1 Psychology 1 2 1 2 5 ## 11 11 1 Psychology 1 2 0 1 5 ## 12 12 1 Psychology 1 2 0 0 4 ## 13 13 1 Psychology 1 2 0 1 7 ## 14 14 1 Psychology 3 3 0 2 4 ## 15 15 1 Psychology 1 4 1 3 3 ## 16 16 1 Psychology 1 4 1 0 8 ## 17 17 1 Psychology 1 4 0 0 4 ## 18 18 1 Psychology 1 4 1 3 5 ## 19 19 1 Psychology 3 4 1 2 0 ## 20 20 1 Premed 1 2 1 1 4 ## 21 21 1 Premed 2 2 1 2 4 ## 22 22 1 Premed 1 3 1 0 3 ## 23 23 1 Premed 1 3 0 2 4 ## 24 24 1 Premed 2 2 1 2 0 ## 25 25 1 Premed 2 3 1 2 1 ## 26 26 1 Premed 2 4 0 0 1 ## 27 27 1 Premed 2 4 1 2 0 ## 28 28 1 Premed 3 1 1 3 4 ## 29 29 1 Premed 1 1 0 0 3 ## 30 30 1 Premed 3 1 1 0 5 ## 31 31 1 Biology 1 1 1 1 9 ## 32 32 1 Biology 1 3 1 3 3 ## 33 33 1 Biology 1 4 1 2 4 ## 34 34 1 Biology 2 1 1 1 1 ## 35 35 1 Biology 2 1 0 0 2 ## 36 36 1 Biology 2 2 1 1 0 ## 37 37 1 Biology 2 4 1 2 3 ## 38 38 1 Biology 3 1 0 0 2 ## 39 39 1 Biology 3 2 0 1 8 ## 40 40 1 Biology 3 4 0 0 4 ## 41 41 1 Biology 3 4 0 0 4 ## 42 42 1 Sociology 2 1 0 0 0 ## 43 43 1 Sociology 2 2 1 0 3 ## 44 44 1 Sociology 2 4 0 0 2 ## 45 45 1 Sociology 2 4 0 0 2 ## 46 46 1 Sociology 2 4 0 1 1 ## 47 47 1 Sociology 3 1 0 0 1 ## 48 48 1 Sociology 3 1 1 1 5 ## 49 49 1 Sociology 3 2 1 1 5 ## 50 50 1 Sociology 3 3 0 0 4 ## 51 51 1 Sociology 3 3 0 0 4 ## 52 52 1 Sociology 3 4 1 0 10 ## 53 53 1 Sociology 3 4 0 0 7 ## 54 54 1 Economics 1 3 1 1 3 ## 55 55 1 Economics 1 3 1 2 4 ## 56 56 1 Economics 2 4 0 1 2 ## 57 57 1 Economics 3 1 0 0 2 ## 58 58 2 Psychology 1 1 1 1 1 ## 59 59 2 Psychology 2 1 0 0 7 ## 60 60 2 Psychology 1 2 0 1 5 ## 61 61 2 Psychology 1 3 1 1 5 ## 62 62 2 Psychology 1 3 0 0 1 ## 63 63 2 Psychology 1 3 0 0 3 ## 64 64 2 Psychology 1 4 0 0 3 ## 65 65 2 Psychology 1 4 0 1 5 ## 66 66 2 Psychology 2 2 0 0 1 ## 67 67 2 Psychology 3 1 0 0 0 ## 68 68 2 Premed 1 2 0 0 5 ## 69 69 2 Premed 1 3 0 0 6 ## 70 70 2 Premed 1 3 1 1 3 ## 71 71 2 Premed 1 4 1 1 5 ## 72 72 2 Premed 1 4 1 2 4 ## 73 
73 2 Premed 2 3 0 0 0 ## 74 74 2 Premed 2 3 0 0 2 ## 75 75 2 Premed 2 4 0 0 2 ## 76 76 2 Premed 3 1 0 0 3 ## 77 77 2 Premed 1 2 1 1 1 ## 78 78 2 Premed 3 2 0 0 1 ## 79 79 2 Premed 3 3 1 1 0 ## 80 80 2 Premed 3 3 0 0 2 ## 81 81 2 Premed 3 4 0 1 10 ## 82 82 2 Biology 1 1 0 0 3 ## 83 83 2 Biology 1 1 0 1 3 ## 84 84 2 Biology 1 1 1 1 8 ## 85 85 2 Biology 1 1 1 0 3 ## 86 86 2 Biology 3 2 0 0 3 ## 87 87 2 Biology 2 2 1 0 1 ## 88 88 2 Biology 2 3 0 0 1 ## 89 89 2 Biology 3 2 0 0 2 ## 90 90 2 Biology 3 3 0 0 8 ## 91 91 2 Biology 3 3 1 1 3 ## 92 92 2 Sociology 2 1 0 0 0 ## 93 93 2 Sociology 2 4 0 0 0 ## 94 94 2 Sociology 3 3 1 2 4 ## 95 95 2 Economics 3 3 0 0 7 ## 96 96 2 Economics 2 2 1 1 0 ## 97 97 2 Economics 2 3 0 0 0 ## 98 98 2 Economics 2 3 0 0 2 ## 99 99 2 Economics 2 4 1 1 1 ## 100 100 2 Economics 1 2 0 0 2 ## Prevmath Mathquiz Statquiz Exp_sqz Hr_base Hr_pre Hr_post Anx_base ## 1 3 43 6 7 71 68 65 17 ## 2 4 49 9 11 73 75 68 17 ## 3 1 26 8 8 69 76 72 19 ## 4 0 29 7 8 72 73 78 19 ## 5 1 31 6 6 71 83 74 26 ## 6 1 20 7 6 70 71 76 12 ## 7 2 13 3 4 71 70 66 12 ## 8 1 23 7 7 77 87 84 17 ## 9 1 38 8 7 73 72 67 20 ## 10 0 NA 7 6 78 76 74 20 ## 11 1 29 8 10 74 72 73 21 ## 12 0 32 8 7 73 74 74 32 ## 13 0 18 1 3 73 76 78 19 ## 14 1 NA 5 4 72 83 77 18 ## 15 1 21 8 6 72 74 68 21 ## 16 0 NA 3 1 76 76 79 14 ## 17 1 37 8 7 68 67 74 15 ## 18 1 37 7 4 77 78 73 39 ## 19 3 32 10 9 74 74 75 20 ## 20 1 NA 7 7 74 75 73 15 ## 21 0 25 7 6 74 84 77 19 ## 22 1 22 4 3 73 71 79 18 ## 23 1 35 8 7 71 74 76 18 ## 24 3 47 8 7 75 75 71 23 ## 25 3 41 6 6 76 73 72 18 ## 26 4 26 7 6 71 76 75 14 ## 27 6 39 8 8 74 79 79 17 ## 28 2 21 7 8 78 79 73 18 ## 29 2 NA 7 9 70 63 66 18 ## 30 1 22 4 7 73 78 69 21 ## 31 1 21 8 8 75 83 73 18 ## 32 0 NA 7 6 78 76 84 24 ## 33 1 26 8 7 76 74 81 17 ## 34 1 20 8 9 76 69 71 17 ## 35 1 30 6 9 69 69 64 22 ## 36 0 40 8 9 77 79 74 21 ## 37 1 35 8 7 78 73 78 19 ## 38 0 10 7 8 74 72 72 15 ## 39 1 35 6 5 71 70 75 20 ## 40 1 44 6 4 67 67 73 12 ## 41 1 26 7 5 77 78 78 21 ## 42 1 NA 
9 11 71 72 67 20 ## 43 1 15 3 4 76 79 71 19 ## 44 1 42 7 7 69 70 64 13 ## 45 1 33 8 7 72 64 68 20 ## 46 0 29 6 6 72 79 76 22 ## 47 1 39 7 8 71 63 66 15 ## 48 0 38 8 8 71 82 79 20 ## 49 1 NA 5 4 75 76 70 16 ## 50 1 24 7 6 74 76 75 22 ## 51 1 NA 7 5 74 74 69 30 ## 52 0 26 5 2 78 80 80 19 ## 53 1 14 5 3 68 78 73 20 ## 54 5 45 9 9 76 79 75 15 ## 55 1 28 8 8 73 78 77 19 ## 56 2 31 8 7 74 78 82 27 ## 57 0 NA 8 9 72 67 67 16 ## 58 1 32 8 10 74 84 76 17 ## 59 0 15 3 6 73 73 71 19 ## 60 0 26 8 7 73 74 76 16 ## 61 1 26 8 7 80 82 86 18 ## 62 2 32 5 5 67 68 73 20 ## 63 0 21 8 7 65 75 69 26 ## 64 1 43 8 8 71 72 76 18 ## 65 1 NA 8 5 72 75 68 21 ## 66 4 34 9 11 70 73 65 11 ## 67 3 33 10 11 72 67 68 16 ## 68 0 30 7 7 69 70 74 18 ## 69 2 46 8 6 68 74 71 16 ## 70 2 NA 7 7 76 79 71 19 ## 71 0 33 7 4 68 71 77 18 ## 72 2 29 7 6 76 79 71 22 ## 73 4 30 9 10 69 70 74 15 ## 74 4 34 8 7 68 70 69 18 ## 75 3 32 9 8 70 62 71 17 ## 76 1 37 6 8 70 73 77 15 ## 77 2 NA 8 10 69 67 64 14 ## 78 3 31 7 8 64 74 70 22 ## 79 2 30 8 8 68 72 71 10 ## 80 2 28 5 5 71 74 67 15 ## 81 0 14 6 3 69 78 76 20 ## 82 1 9 7 8 71 69 76 13 ## 83 1 11 4 7 72 83 73 14 ## 84 1 30 6 7 76 78 71 15 ## 85 1 15 5 7 76 67 71 18 ## 86 1 32 3 5 72 70 67 15 ## 87 0 22 5 4 74 78 72 20 ## 88 4 NA 8 9 70 71 69 10 ## 89 0 25 6 7 68 70 73 16 ## 90 1 18 4 3 71 73 77 15 ## 91 1 11 4 3 72 75 76 24 ## 92 1 11 6 9 71 78 72 16 ## 93 2 37 9 9 72 71 73 17 ## 94 0 28 7 6 73 77 79 24 ## 95 2 NA 8 6 69 70 71 23 ## 96 1 33 7 9 74 73 71 17 ## 97 2 28 8 9 70 66 65 17 ## 98 2 38 9 10 65 65 69 18 ## 99 4 41 8 8 72 68 73 17 ## 100 2 39 7 7 70 70 64 17 ## Anx_pre Anx_post ## 1 22 20 ## 2 19 16 ## 3 14 15 ## 4 13 16 ## 5 30 25 ## 6 15 19 ## 7 16 17 ## 8 19 22 ## 9 14 17 ## 10 24 19 ## 11 25 22 ## 12 35 33 ## 13 23 20 ## 14 27 28 ## 15 27 22 ## 16 18 21 ## 17 19 18 ## 18 39 40 ## 19 12 18 ## 20 11 20 ## 21 27 23 ## 22 13 19 ## 23 22 25 ## 24 28 24 ## 25 24 26 ## 26 10 18 ## 27 12 16 ## 28 13 16 ## 29 12 14 ## 30 14 17 ## 31 21 23 ## 32 27 25 ## 33 26 15 ## 
34 25 19 ## 35 16 18 ## 36 14 19 ## 37 12 17 ## 38 21 16 ## 39 27 22 ## 40 19 17 ## 41 15 15 ## 42 23 21 ## 43 21 17 ## 44 24 22 ## 45 14 22 ## 46 27 24 ## 47 21 13 ## 48 26 26 ## 49 18 23 ## 50 27 23 ## 51 36 32 ## 52 24 24 ## 53 29 30 ## 54 8 17 ## 55 13 18 ## 56 21 24 ## 57 20 22 ## 58 19 14 ## 59 16 16 ## 60 20 17 ## 61 27 20 ## 62 25 24 ## 63 29 23 ## 64 13 16 ## 65 26 27 ## 66 19 9 ## 67 25 15 ## 68 15 15 ## 69 10 17 ## 70 13 15 ## 71 21 19 ## 72 15 20 ## 73 21 17 ## 74 21 19 ## 75 23 19 ## 76 23 14 ## 77 17 15 ## 78 25 19 ## 79 13 15 ## 80 17 17 ## 81 25 23 ## 82 10 13 ## 83 16 15 ## 84 18 17 ## 85 12 17 ## 86 9 13 ## 87 27 18 ## 88 16 14 ## 89 20 19 ## 90 19 21 ## 91 17 21 ## 92 21 15 ## 93 11 17 ## 94 18 20 ## 95 28 24 ## 96 18 17 ## 97 12 13 ## 98 14 19 ## 99 11 18 ## 100 11 14
```

---

# Step 3: Compute new variables/values

```r
d_clean <- d_clean %>%
  mutate(newVar = Mathquiz/2)
```

*Note that only `Mathquiz` and `newVar` are shown here to keep the output short; in reality, all of the other variables are still in `d_clean`.*

```
## # A tibble: 100 x 2
##    Mathquiz newVar
##       <dbl>  <dbl>
##  1       43   21.5
##  2       49   24.5
##  3       26   13  
##  4       29   14.5
##  5       31   15.5
##  6       20   10  
##  7       13    6.5
##  8       23   11.5
##  9       38   19  
## 10       NA   NA  
## # … with 90 more rows
```

---

# Step 4: Get descriptives

.pull-left[
```r
library(furniture)
tableF(d_clean, Major)
```

```
## 
## ─────────────────────────────────────────
##  Major      Freq CumFreq Percent CumPerc
##  Psychology 29   29      29.00%  29.00% 
##  Premed     25   54      25.00%  54.00% 
##  Biology    21   75      21.00%  75.00% 
##  Sociology  15   90      15.00%  90.00% 
##  Economics  10   100     10.00%  100.00%
## ─────────────────────────────────────────
```
]

.pull-right[
```r
tableF(d_clean, Phobia)
```

```
## 
## ─────────────────────────────────────
##  Phobia Freq CumFreq Percent CumPerc
##  0      12   12      12.00%  12.00% 
##  1      15   27      15.00%  27.00% 
##  2      12   39      12.00%  39.00% 
##  3      16   55      16.00%  55.00% 
##  4      21   76      21.00%  76.00% 
##  5      11   87      11.00%  87.00% 
##  6      1    88      1.00%   88.00% 
##  7      4    92      4.00%   92.00% 
##  8      4    96      4.00%   96.00% 
##  9      1    97      1.00%   97.00% 
##  10     3    100     3.00%   100.00%
## ─────────────────────────────────────
```
]

---

# Step 4: Get descriptives

```r
library(furniture)
library(dplyr)

d_clean %>%
  dplyr::group_by(Major) %>%
  table1(Mathquiz, Phobia, Coffee)
```

```
## Using dplyr::group_by() groups: Major
```

```
## 
## ───────────────────────────────────────────────────────────────────
##                                 Major 
##           Psychology Premed     Biology     Sociology   Economics 
##           n = 25     n = 21     n = 19      n = 12      n = 8     
##  Mathquiz                                                         
##           29.6 (8.9) 31.0 (8.2) 24.2 (10.4) 28.0 (10.4) 35.4 (6.3)
##  Phobia                                                           
##           3.6 (2.4)  3.0 (2.5)  3.7 (2.6)   3.2 (3.0)   1.8 (1.4) 
##  Coffee                                                           
##     0     17 (68%)   11 (52.4%) 10 (52.6%)  8 (66.7%)   4 (50%)   
##     1     8 (32%)    10 (47.6%) 9 (47.4%)   4 (33.3%)   4 (50%)   
## ───────────────────────────────────────────────────────────────────
```

---
class: inverse, center, middle

# Questions?

---
class: inverse, center, middle

# Next Topics

### More Data Manipulation

### Understanding Data via Figures
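
---

# Extra: Checking the Summation Rules in `R`

.large[For anyone who wants to revisit the four summation rules from earlier in the deck, here is a minimal sketch that checks them numerically. It reuses the `x` and `y` values from the small `df` example; the constant `C = 2` and the names `rule1_add`, `rule1_sub`, `rule2`, `rule3`, `sum_of_products`, and `product_of_sums` are made up for illustration.]

```r
## Values taken from the small `df` example (columns x and y)
x <- c(5, 3, 4, 1)
y <- c(1, 1, 0, 0)
C <- 2            # an arbitrary constant, chosen only for illustration
n <- length(x)

## Rule 1: the sum of a sum (or difference) is the sum (or difference) of the sums
rule1_add <- sum(x + y) == sum(x) + sum(y)
rule1_sub <- sum(x - y) == sum(x) - sum(y)

## Rule 2: summing a constant n times gives n * C
rule2 <- sum(rep(C, n)) == n * C

## Rule 3: a constant can be pulled out in front of the sum
rule3 <- sum(C * x) == C * sum(x)

## Rule 4: the sum of products is generally NOT the product of the sums
sum_of_products <- sum(x * y)
product_of_sums <- sum(x) * sum(y)

c(rule1_add, rule1_sub, rule2, rule3)
c(sum_of_products, product_of_sums)
```

.large[With these particular values, the first four checks are all `TRUE`, while the last two numbers differ (8 versus 26), which is the point of Rule 4.]
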